I received my Ph.D. in Artificial Intelligence from the College of Engineering at Seoul National University (SNU), where I was advised by Prof. Nojun Kwak in the Machine Intelligence and Pattern Analysis Lab (MIPAL). Before that, I obtained my bachelor's degree in Agricultural Economics from the College of Agriculture and Life Sciences, SNU. My research interests lie in computer vision, deep learning, and neural rendering. Much of my recent work has focused on efficient training frameworks for NeRF and 3D-GS and on training with synthetic data generated by diffusion models. I am currently on the job market, looking for research scientist/engineer roles where I can contribute, learn, and collaborate with great folks. Always happy to chat!

Email / CV / Google Scholar / LinkedIn / Github


Sep. 2025: One paper accepted to SIGGRAPH Asia 2025 (Journal Track).

Aug. 2025: Officially graduated from SNU with the Outstanding Dissertation Award! 🥳

May 2025: Successfully wrapped up my Ph.D. defense! 🎓

Mar. 2025: One paper accepted to the CVPR 2025 Workshop on Computer Vision for Metaverse (Oral).

Feb. 2025: I'll be heading back to Meta Reality Labs this summer for another round as a research scientist intern!

Sep. 2024: Recognized as an outstanding reviewer for ECCV 2024.

Apr. 2024: One paper accepted to the CVPR 2024 Workshop on Efficient Large Vision Models.

Feb. 2024: I will be joining Meta Reality Labs as a research scientist intern this summer.


Yeonjin Chang, Juhwan Cho, Seunghyeon Seo, Wonsik Shin, Nojun Kwak

Under Review

project page / arXiv

We introduce LoGoColor, a 3D colorization method that avoids the color-averaging limitation of prior methods by generating locally and globally consistent multi-view colorized training images, enabling diverse and consistent 3D colorization of complex 360° scenes.


Shaojie Bai\*, Seunghyeon Seo\*, Yida Wang, Chenghui Li, Owen Wang, Te-Li Wang, Tianyang Ma, Jason Saragih, Shih-En Wei, Nojun Kwak, Hyung Jun Kim

SIGGRAPH Asia 2025 (Journal Track)

project page / arXiv

We present GenHMC, a generative diffusion framework that synthesizes photorealistic head-mounted camera (HMC) images from avatar renderings. By enabling high-quality unpaired training data generation, GenHMC facilitates scalable training of facial encoders for Codec Avatars and generalizes well across diverse identities and expressions.


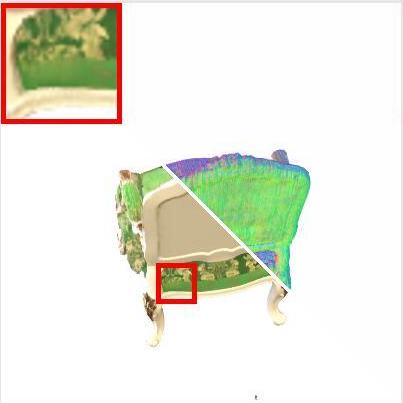

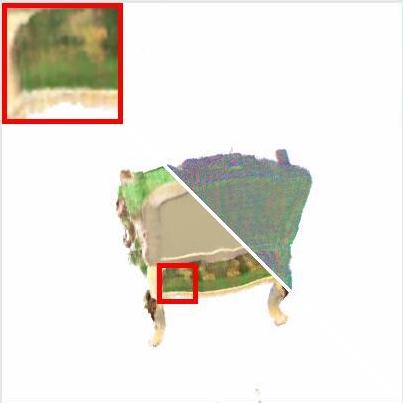

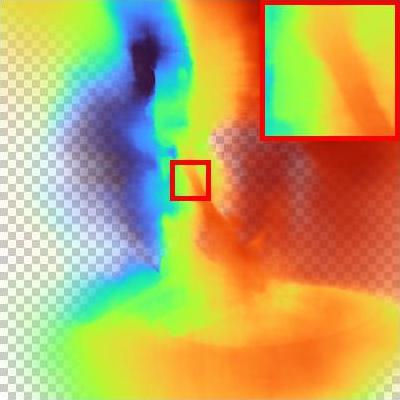

Yeonjin Chang, Erqun Dong, Seunghyeon Seo, Nojun Kwak, Kwang Moo Yi

Under Review

project page / arXiv

We propose ROODI, a method for extracting and reconstructing 3D objects in the presence of occlusions using Gaussian Splatting. It first removes irrelevant splats using a KNN-based pruning strategy, then completes the occluded regions with a diffusion-based generative inpainting model, enabling high-quality geometry recovery even under heavy occlusion.


Ingyun Lee, Jae Won Jang, Seunghyeon Seo, Nojun Kwak

Under Review

arXiv

We propose DivCon-NeRF, a novel ray augmentation method designed for few-shot novel view synthesis. By introducing surface-sphere and inner-sphere augmentation techniques, our method effectively balances ray diversity and geometric consistency, which helps suppress the floaters and appearance artifacts often seen in sparse-input settings.


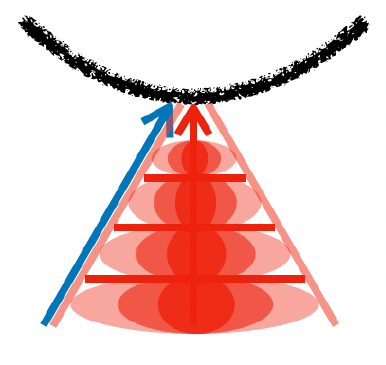

Seunghyeon Seo, Yeonjin Chang, Jayeon Yoo, Seungwoo Lee, Hojun Lee, Nojun Kwak

CVPR 2025 Workshop on Computer Vision for Metaverse (Oral)

project page / arXiv

We introduce ARC-NeRF, a few-shot rendering method that casts area rays to cover a broader set of unseen viewpoints, improving spatial generalization with minimal input. We further propose an adaptive frequency regularization and a luminance consistency loss to refine textures and high-frequency details in the rendered outputs.


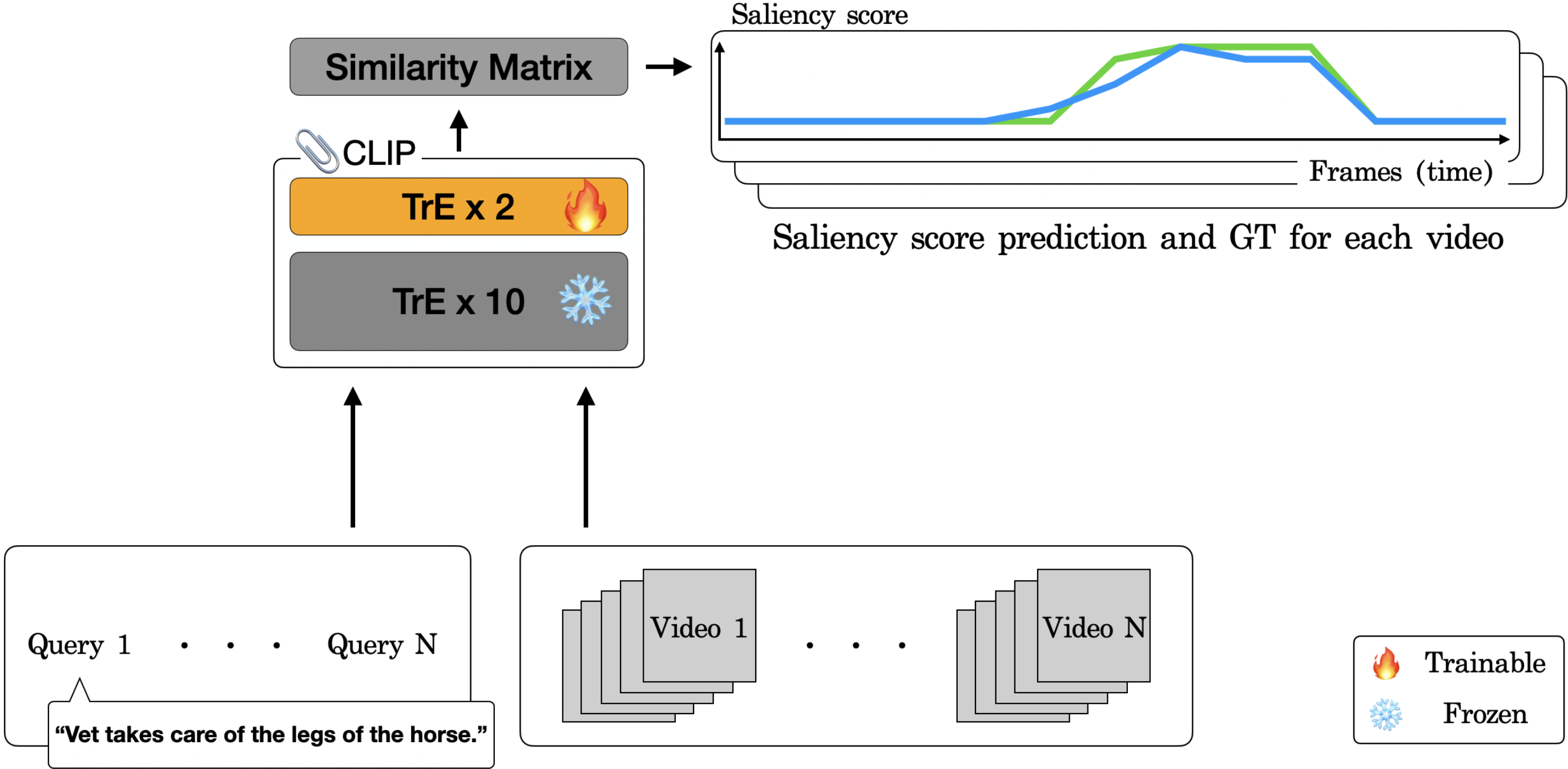

Donghoon Han\*, Seunghyeon Seo\*, Eunhwan Park, SeongUk Nam, Nojun Kwak

CVPR 2024 Workshop on Efficient Large Vision Models

arXiv

We introduce HL-CLIP, a CLIP-based video highlight detection framework that leverages the strong semantic alignment of pre-trained vision-language models. By fine-tuning the visual encoder and applying a saliency-based temporal pooling technique, our method achieves state-of-the-art performance with minimal domain-specific supervision.


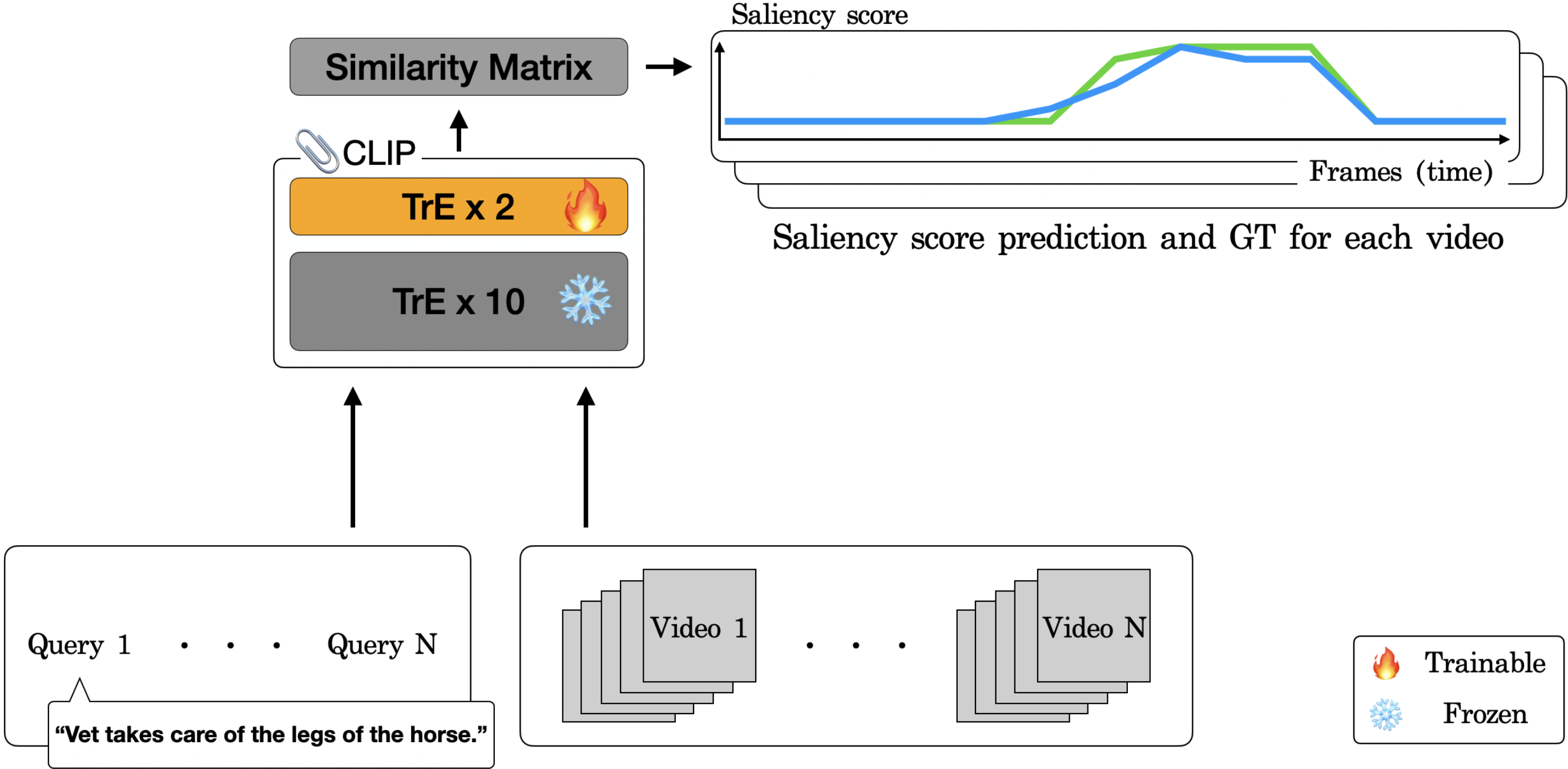

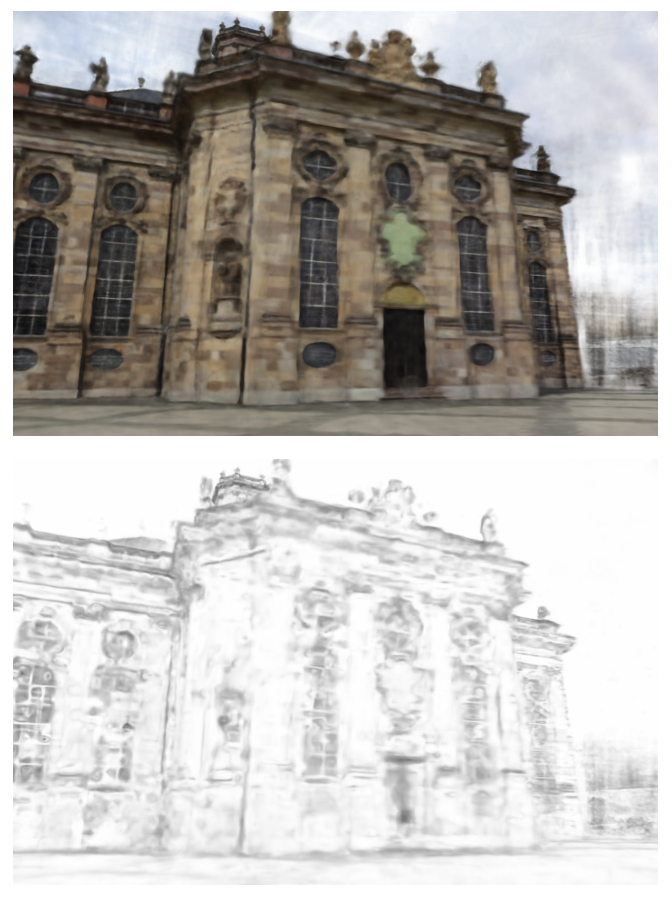

Yeonjin Chang, Yearim Kim, Seunghyeon Seo, Jung Yi, Nojun Kwak

WACV 2024

arXiv

We present SR-TensoRF, a sun-aligned relighting approach for NeRF-style outdoor scenes that does not rely on environment maps. By aligning lighting with solar movement and using a cubemap-based TensoRF backbone, our method enables realistic and fast relighting of dynamic outdoor scenes with consistent directional light simulation.


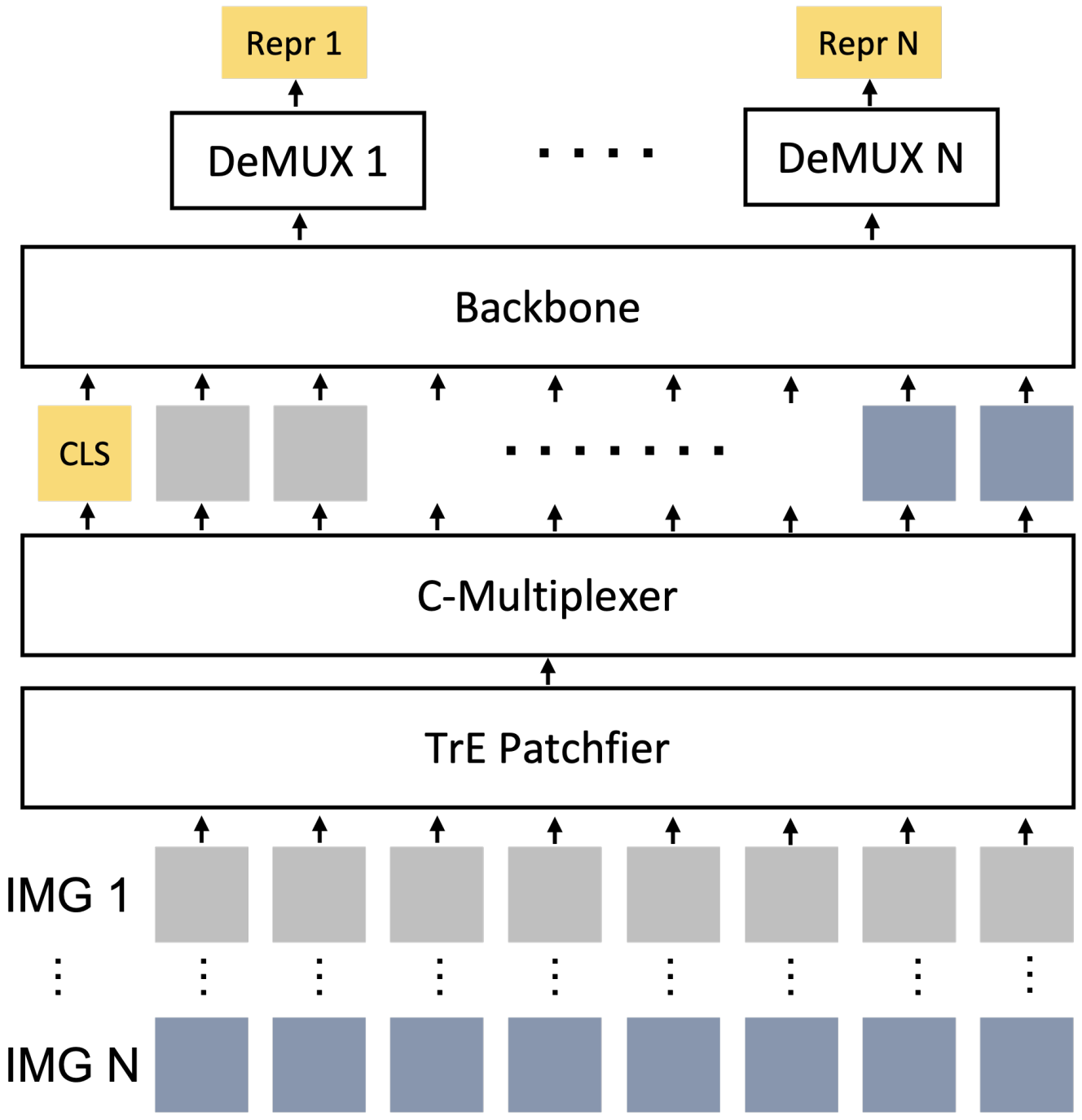

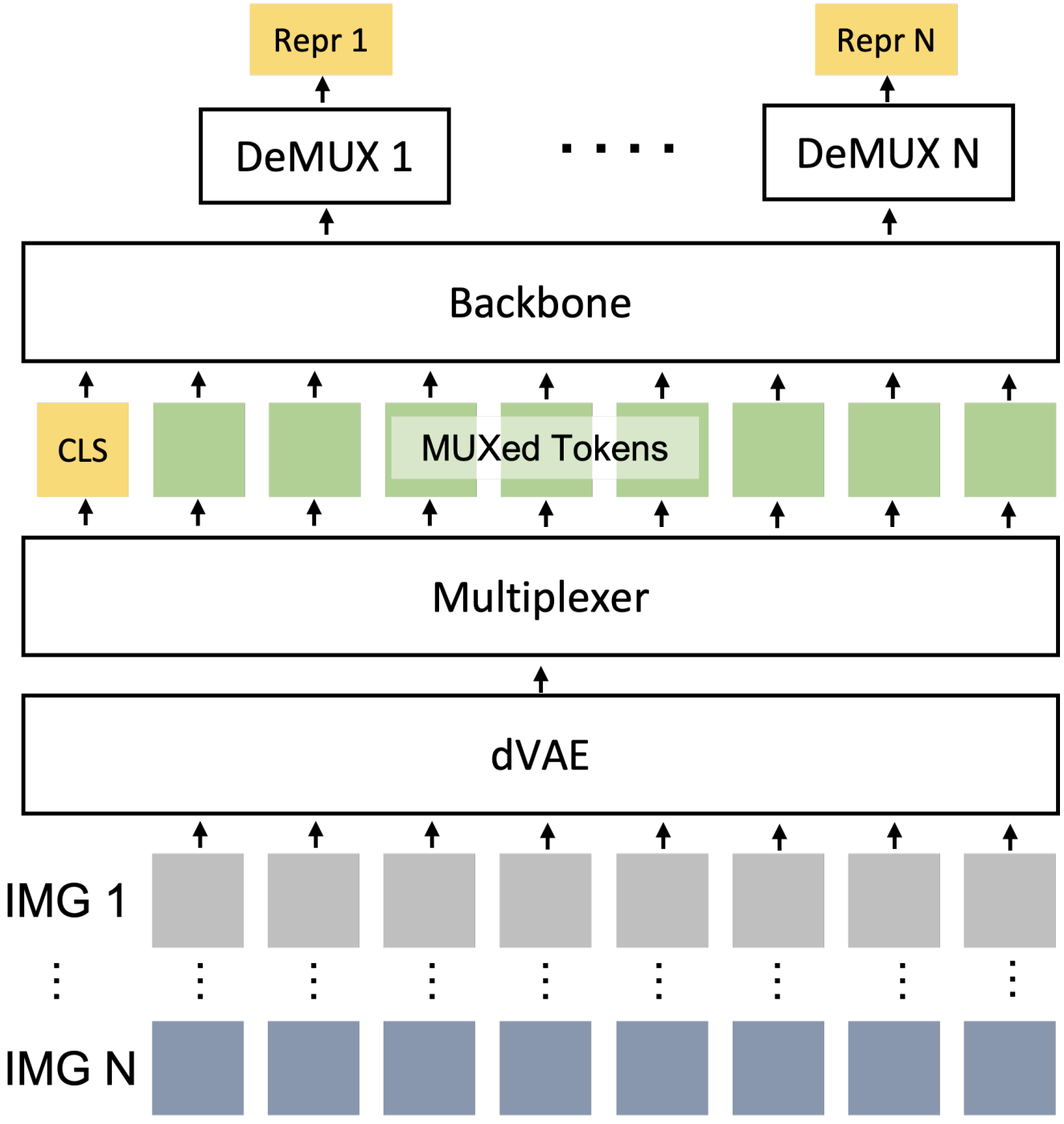

Donghoon Han, Seunghyeon Seo, DongHyeon Jeon, Jiho Jang, Chaerin Kong, Nojun Kwak

NeurIPS 2023 Workshop on Advancing Neural Network Training (Oral)

arXiv

We propose ConcatPlexer, a simple yet effective batching strategy that accelerates Vision Transformer (ViT) inference by concatenating visual tokens along an additional dimension. This approach preserves model accuracy while improving inference throughput, requiring no architectural changes and offering easy integration into existing ViT pipelines.


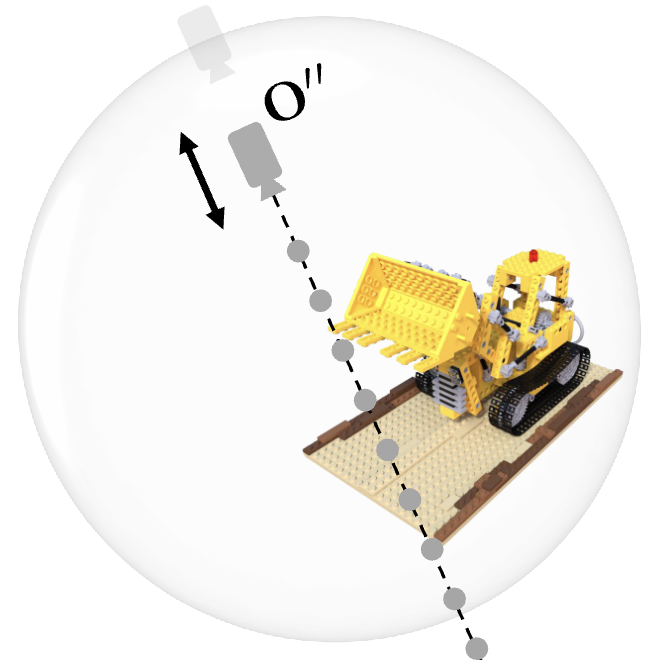

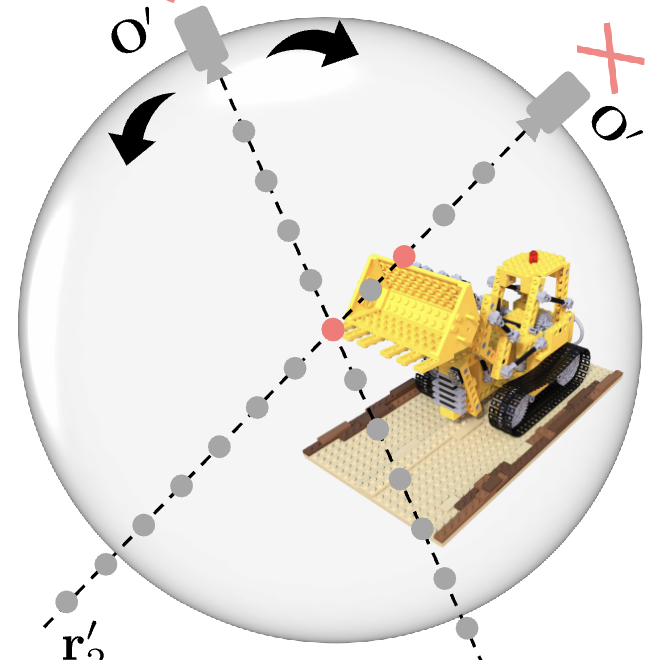

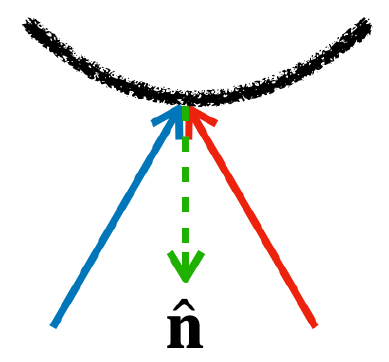

Seunghyeon Seo, Yeonjin Chang, Nojun Kwak

ICCV 2023

project page / code / video / arXiv

We present FlipNeRF, a framework that utilizes flipped reflection rays derived from input images to simulate novel training views. This approach enhances surface normal estimation and rendering fidelity, enabling better generalization in few-shot novel view synthesis without requiring additional images or supervision.


Seunghyeon Seo, Jaeyoung Yoo, Jihye Hwang, Nojun Kwak

UAI 2023

arXiv

We introduce MDPose, a real-time multi-person pose estimation method based on mixture density modeling. By randomly grouping keypoints and modeling their joint distribution without relying on person-specific instance IDs, MDPose achieves high accuracy and real-time performance even in crowded scenes with complex pose variations.


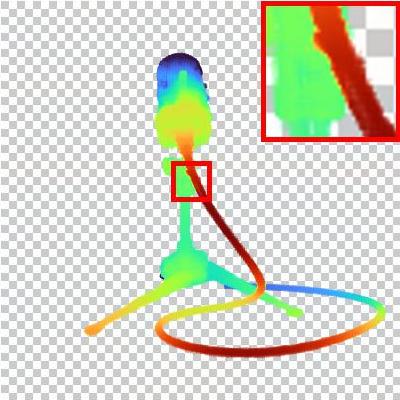

Jaeyoung Yoo\*, Hojun Lee\*, Seunghyeon Seo, Inseop Chung, Nojun Kwak

ICML 2023

code / arXiv

We present D-RMM, an end-to-end multi-object detection framework that models object locations using a regularized mixture density model. The training objective includes a novel Maximum Component Maximization (MCM) loss that prevents duplicate detections, resulting in improved accuracy and stability in both dense and sparse detection scenarios.


Seunghyeon Seo, Donghoon Han\*, Yeonjin Chang\*, Nojun Kwak

CVPR 2023 (Qualcomm Innovation Fellowship Korea 2023 Winner)

project page / code / video / arXiv

We propose MixNeRF, which models each camera ray as a mixture of Laplacian densities to better capture multi-modal RGB distributions in sparsely sampled scenes. Our framework includes an auxiliary depth prediction task and a mixture regularization loss, allowing for more accurate novel view synthesis in few-shot NeRF settings.


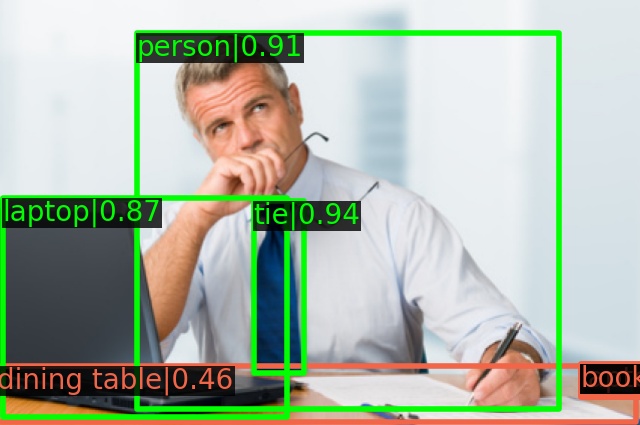

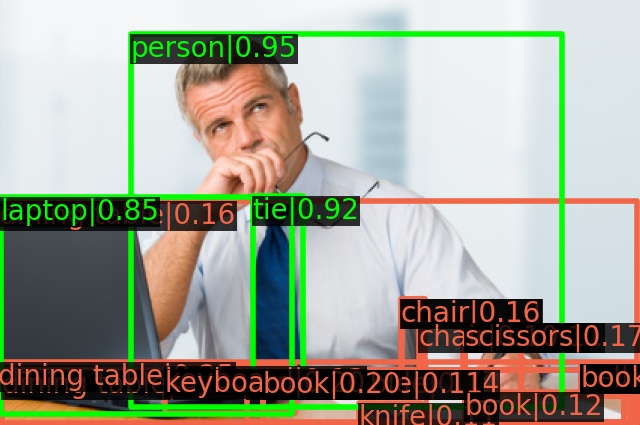

JongMok Kim, Jooyoung Jang, Seunghyeon Seo, Jisoo Jeong, Jongkeun Na, Nojun Kwak

CVPR 2022

code / arXiv

We introduce MUM, a semi-supervised object detection framework that applies strong spatial data augmentation by mixing image tiles and unmixing their corresponding features. This strategy allows the model to benefit from mixed inputs without corrupting label supervision, leading to improved performance in low-label regimes on the COCO and VOC benchmarks.


Thanks for sharing the website template, Jon Barron. :)